Data Centers Are Evolving; Here’s How to Keep Up

Executive Summary

Key Takeaways:

- Hybrid cloud adoption is accelerating, creating demand for high-speed interconnects and secure on-premise + cloud architectures.

- AI/ML workloads require ultra-high-speed connectivity, advanced fiber, 400G/800G cabling, and robust power protection systems.

- Edge and distributed data centers are expanding to support latency-sensitive applications and rugged environments.

- Sustainability and energy efficiency are critical, with data centers adopting greener cabling and improved thermal performance strategies.

- Modernization is ongoing, with upgrades to Cat6A/Cat8, structured cabling, surge protection, and compliant security infrastructure.

As cloud computing becomes both more powerful and more accessible, companies and organizations are shifting some of their computing operations to the cloud – but by no means all. In fact, many enterprises remain committed to advancing their own on-premise computing resources while supplementing them with cloud services, increasingly embracing a hybrid computing strategy.

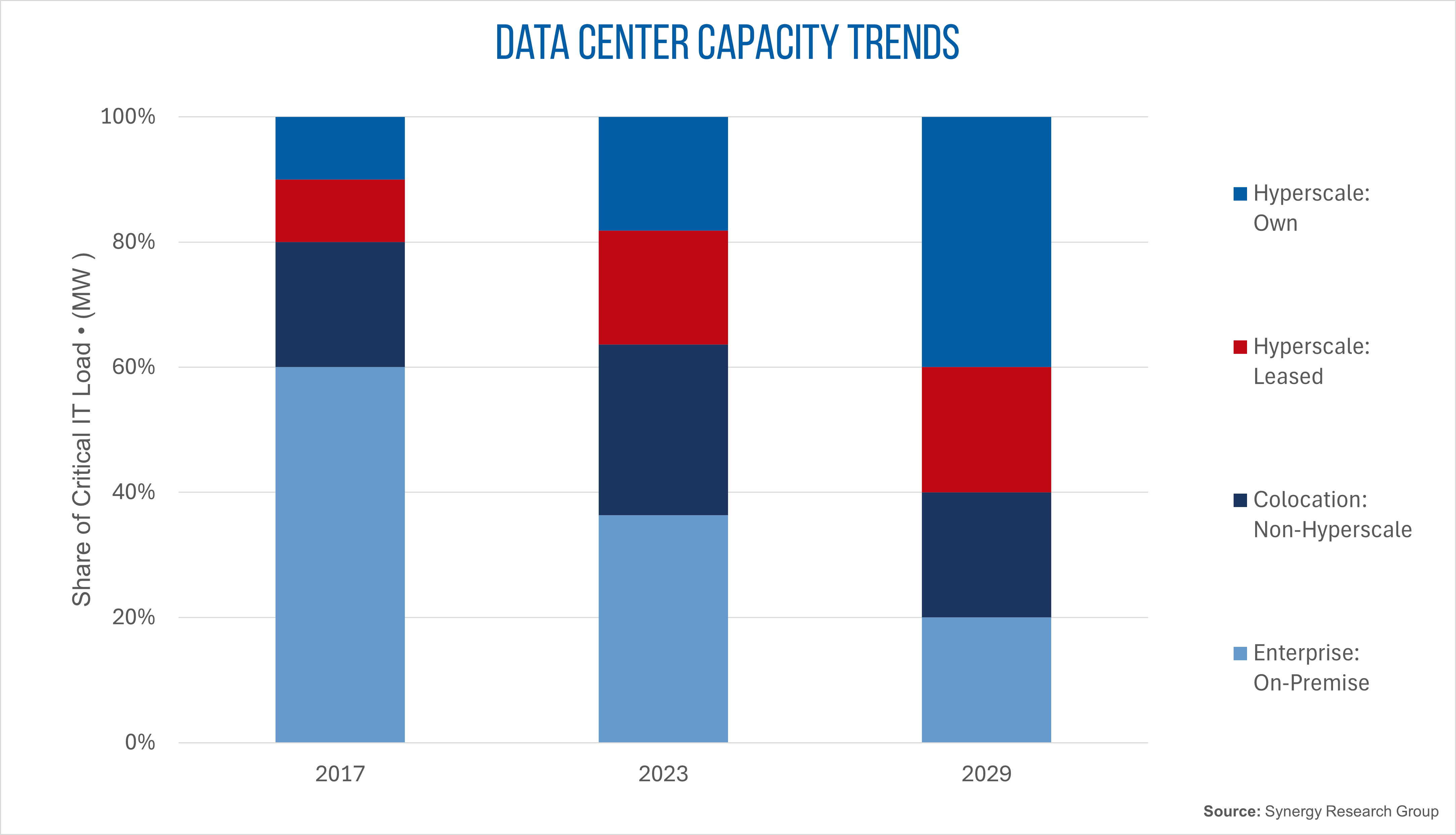

The largest segment of the data center market has been the enterprise segment, but that is changing. The hyperscaler segment grabs attention for phenomenal expansion rates, but all data center segments – cloud/hyperscale, enterprise/on-premise, high performance computing (HPC), co-location, and edge – are growing. Market share numbers may vary over time, but every segment contributes to making a bigger pie.

As the enterprise segment grows, it adapts to the same megatrends affecting the technology industry at large, including artificial intelligence (AI), the internet of things (IoT), and the increasing reliance on the cloud.

These trends feed into each other in a circular fashion. Data centers have the computing resources to make AI practical. AI makes it possible to analyze significantly larger volumes of data. The ability to process ever-growing data quantities makes it worth the effort to collect it, which gives impetus to the expansion of the IoT and edge computing. Greater use of AI and the spread of the IoT fuel the demand for expanded data center capacity and more data centers.

This arc of the virtuous circle has had a side effect. The industry is building more edge data centers and distributed data centers – smaller than hyperscale facilities and located closer to where data is generated, collected, and used.

All of this is naturally accompanied by relentless growth in network traffic, which necessitates the adoption of infrastructure that provides more bandwidth, allows greater data transfer rates, and is more reliable. Infrastructure requirements include Ethernet equipment, fiber optic and Ethernet cables, and surge protectors. Here we’ll explore key drivers leading to transformation in the enterprise segment of the data center market.

Infrastructure support for hybrid cloud

Enterprise operations opt to maintain their own data centers for a number of reasons, including security for proprietary data, privacy for customer data, or the need for a computing structure that guarantees the absolute minimum latency.

Financial institutions are among the enterprises that put a premium on data security. Medical facilities have legal obligations to keep patient data private. Manufacturing operations are among those concerned with latency. Industry 4.0 involves real-time processing of data, and real-time operation by definition demands the lowest possible latency.

Recent events provided a reminder of another reason for organizations to operate their own data centers. In October of 2025, a simple glitch at AWS triggered a widespread outage of web-based services that lasted for hours, disrupting operations for a wide range of organizations. Such risks can be hedged by operating one’s own backups.

The simultaneous adoption of cloud computing enables scalability and flexibility, provides an added measure of operational resilience, and can be more cost-effective in all of the above examples.

Maintaining a data center is, of course, resource-intensive. Enterprise operations can control investment by using the cloud to fulfill some computing needs. By moving to a hybrid cloud architecture, businesses can continue to meet industry standards while gaining the flexibility of the public cloud for less regulated computing operations.

The hybrid (or multi-cloud) architecture is clearly an increasingly attractive model in the enterprise world. The global hybrid cloud market was valued at $96.7 billion in 2023, and is growing at a compound annual growth rate in the upper teens, by one estimate. If that CAGR is maintained, this market would reach $480.2 billion by 2033.
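The growth figures above can be checked with a few lines of arithmetic. The sketch below is illustrative only: it assumes the 2023 and 2033 values quoted above and a ten-year compounding window, and the function name `implied_cagr` is hypothetical rather than taken from the cited estimate.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted in the text, in billions of US dollars.
START_2023 = 96.7
END_2033 = 480.2

# Assumption: ten full years of compounding between 2023 and 2033.
rate = implied_cagr(START_2023, END_2033, years=10)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied rate comes out at roughly 17 percent per year, which is consistent with the "upper teens" description.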

With enterprises adopting hybrid models that combine on-premises data centers with public cloud resources, the need for reliable, high-speed interconnectivity has soared. Specifically, organizations investing in hybrid infrastructure require advanced cabling and connectivity products to bridge private and public environments.

This includes data center interconnect (DCI) using fiber optics and high-performance Ethernet cabling (10G, 40G, 100G), along with appropriate patch panels and cable management systems.

Infrastructure support for AI/ML

AI is not yet ubiquitous in electronics, but it is already widespread and becoming more so. The recent explosive success of AI and machine learning (ML) is predicated entirely on the existence of data centers. Only the largest data centers have had the computational resources to train the most sophisticated AI models, the types of models that make the most advanced large language models (LLMs) and other generative AI capabilities possible.

It is still early in the AI/ML era, and most AI training still requires the extensive computing resources of a data center. While AI/ML capabilities are gradually yet inexorably being pushed out to the network edge, it is still more practical to perform the majority of AI inference workloads in data centers.

The rise of AI accelerates the demand for specialized connectivity and power protection hardware. AI involves the collection, storage, and analysis of ever-expanding volumes of data. Networks require big, fast pipes to handle the volume, and because the flow of data relentlessly increases, the eventual requirement for even more bandwidth and throughput is easy to predict.

AI/ML workloads place extraordinary demands on infrastructure. Today, enterprise data centers that run them need ultra-high-speed cabling (400G, 800G), including direct attach copper (DAC) cables and active optical cables (AOCs).

Data centers have strict requirements for uptime. Operational reliability is heavily dependent on power management, which makes robust, highly reliable surge protectors and intelligent power distribution units (PDUs) a necessity.

Edge and Distributed Data Centers

Again, AI/ML capabilities are being pushed out to the network edge. It is possible to perform some AI/ML functions at the device level, in such products as pacemakers and continuous glucose monitors (CGMs), for example. These medical wearables demonstrate that extraordinary things can be accomplished with ML models that have been pared to the bare minimum for specific applications, running on modest processors operating on the merest trickles of power.

Pacemakers and CGMs reveal the path to a more intelligent edge, but for now they are trailblazing outliers. They do their AI/ML processing locally because they must. Medical wearables cannot tolerate network latency, and they must keep working even when there is no network connection at all, in which case latency does not come into play.

Most edge applications are connected, and it remains practical to perform many AI inference workloads in data centers. In these instances, latency most certainly is a factor.

To meet latency-sensitive use cases, edge data centers and distributed data centers are proliferating. These tend to be tailored for decentralized and often rugged environments, meaning that in addition to high-speed connectivity requirements, edge facilities will prioritize compact, resilient, and autonomous infrastructure solutions.
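To see why proximity matters, consider propagation delay alone. The sketch below assumes that light travels through optical fiber at roughly two-thirds of its speed in vacuum (about 200,000 km/s, or 5 microseconds per kilometer) and ignores switching, queuing, and processing delays. The distances are hypothetical examples, not measurements.

```python
# Assumption: signal speed in fiber is about 2/3 the speed of light in vacuum.
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1_000


# Hypothetical distances: a nearby edge site, a metro site, a distant region.
for label, km in [("edge, 10 km", 10), ("metro, 100 km", 100), ("remote, 2000 km", 2000)]:
    print(f"{label}: {round_trip_ms(km):.2f} ms round trip")
```

Under these assumptions, a 2,000 km path adds about 20 ms to every round trip before any processing happens, while a 10 km path adds about 0.1 ms.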

Additional needs of this smaller class of data centers are apt to include shielded and flexible cabling, low-bend-radius fiber optics, and – for reliability purposes – rack-mounted surge protectors and compact UPS units.

Datacom infrastructure

The trends outlined above combine to increase the volumes of data transmitted to data centers, accompanied of course by an increase in the results returned. The process of analyzing the streams of data fed into data centers, however, also involves an extraordinary amount of internal data movement. Reliable, high-speed, high-bandwidth communication is a necessity for data centers’ internal networks as well.

In the enterprise data center market, 25G to 100G is often sufficient. The connector market, on pace to be worth $12 billion by the end of 2025, is dominated by large public data centers that rely heavily on 400G and 800G.
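As a rough illustration of what these line rates mean in practice, the sketch below estimates how long it takes to move a dataset over a single link at each speed. It assumes the link is fully utilized with no protocol overhead, and the 10 TB dataset size is a hypothetical example.

```python
def transfer_seconds(dataset_terabytes: float, link_gbps: float) -> float:
    """Ideal time to move a dataset over one link, ignoring protocol overhead."""
    bits = dataset_terabytes * 1e12 * 8   # decimal terabytes to bits
    return bits / (link_gbps * 1e9)       # gigabits per second to bits per second


DATASET_TB = 10  # hypothetical dataset size

for gbps in (25, 100, 400, 800):
    minutes = transfer_seconds(DATASET_TB, gbps) / 60
    print(f"{gbps}G: {minutes:.1f} minutes")
```

Under these idealized assumptions, 10 TB takes about 53 minutes at 25G and under 2 minutes at 800G. Real transfers would be slower once overhead and contention are included.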

Other data communications support systems include everything from transceivers to cables and, given the critical importance of network reliability, even power supplies (see Table 1). Reliability is critical regardless of the size or scale of the data center.

Sustainability and Efficiency in Data Centers

Before AI/ML, data centers were already consuming enough energy to rank as a distinct category among the industries consuming the most power globally. It was already a priority for the data center industry to minimize energy usage.

After AI/ML took off? The need to minimize energy usage has only become more acute because energy usage has increased. At data center scale, AI/ML workloads run most cost-effectively on graphics processing units (GPUs), which notably consume more energy than CPUs. Furthermore, the expectation for the foreseeable future is that every new generation of GPUs is going to be more power-hungry than the last.

Energy-efficient operations are driving demand for power-saving, eco-friendly, performance-optimized components. Green data center initiatives are reshaping component selection to prioritize plenum-rated and LSZH cables for safety and compliance, efficient cable organization to support thermal performance, and low-loss, high-performance connectivity solutions.

Retrofits and Modernization

Modernization of existing facilities is ongoing. Typical retrofits replace legacy copper with Cat6A through Cat8 cabling or fiber, move to structured cabling with improved cable management, upgrade surge protection, and add secure, certified connectivity that meets current compliance requirements.

Regulatory and Security Concerns

Security and regulatory shifts are prompting higher standards and broader deployment of certified infrastructure products. Stricter compliance requirements and zero-trust security models necessitate certified, shielded cables and surge protectors; EMI-filtered and tamper-resistant solutions; and segmented cabling architectures for secure networks.

Strategies to Ensure Bandwidth and Performance

Data centers are subject to the latest technology trends and are enablers of many of them. The practical result of many of these trends is an increase in the volume of data traversing communications networks, entering and often passing through data centers. All of this necessitates infrastructure to handle it, including technologies that enable higher bandwidth and greater throughput, and technologies that make data centers more robust and resilient. Specifically, that includes components like cables and surge protectors.

By aligning offerings with these trends, data center operators and their vendors can remain competitive and relevant in the rapidly transforming data center ecosystem.

Frequently Asked Questions

Data centers are changing due to the rise of AI/ML, IoT, hybrid cloud models, and increasing data volumes that require higher bandwidth, faster processing, and more efficient infrastructure.

Hybrid cloud relies on high-speed data center interconnects, fiber optics, 10G–100G Ethernet cabling, patch panels, and reliable power management to bridge private and public systems.

AI workloads demand massive bandwidth, low-latency networks, specialized cabling like 400G/800G fiber, and resilient power distribution systems such as PDUs and surge protectors.

Edge facilities reduce latency for real-time applications, support distributed computing, and require rugged cabling, compact UPS systems, and low-bend-radius fiber solutions.

Data centers adopt LSZH/plenum-rated cables, optimized cable layouts for airflow, and high-efficiency components to reduce power consumption, especially with GPU-heavy AI systems.

Modernization includes replacing legacy copper with Cat6A–Cat8 or fiber, upgrading surge protection, improving cable management, and implementing secure, certified connectivity solutions.